Generative modeling is the branch of artificial intelligence concerned with creating new, realistic data such as images, audio, and text. One of the most significant advances in this field has been the development of diffusion models, which are particularly noteworthy when a Transformer serves as the denoising network. This article explains how these models work, how they are built, and what they can do.

Understanding Diffusion Models

The Core Concept

Diffusion models are generative models that turn samples from a simple distribution, usually Gaussian noise, into samples that resemble real-world data. During training, noise is added to real data over a series of steps, and the model learns a reverse process that recovers the original data from the noisy version.

Forward and Reverse Processes

Diffusion models consist of two main processes:

- Forward Process: This is a gradual corruption of the data by adding Gaussian noise at each step, making the data increasingly noisy and eventually resembling pure noise.

- Reverse Process: A neural network is trained to undo the noise addition one step at a time, gradually recovering clean data from a noisy input.

In essence, the model learns to denoise the data. Once trained, it can start from pure noise and apply the reverse process repeatedly, generating new samples that resemble the training data.
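The forward process described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the linear schedule, its endpoint values, the 1000-step length, and the function names `linear_betas`, `cumulative_alphas`, and `add_noise` are all assumptions chosen for the example. It relies on the fact that adding Gaussian noise step by step is equivalent to adding one larger dose of Gaussian noise, so a noisy sample at any step can be drawn directly from the clean data.

```python
import math
import random

def linear_betas(num_steps, beta_start=1e-4, beta_end=0.02):
    """Assumed linear noise schedule: one noise variance per step."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """Running product of (1 - beta): the share of signal variance left at each step."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out

def add_noise(x0, t, alpha_bars, rng):
    """Draw x_t directly from x_0: sqrt(a) * x0 + sqrt(1 - a) * eps."""
    a = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    return xt, eps

rng = random.Random(0)
alpha_bars = cumulative_alphas(linear_betas(1000))
x0 = [1.0, -0.5, 0.25, 0.0]                      # toy "data" vector
x_early, _ = add_noise(x0, 10, alpha_bars, rng)  # still close to x0
x_late, _ = add_noise(x0, 999, alpha_bars, rng)  # almost pure noise
```

At an early step the signal fraction `alpha_bars[t]` is close to 1, so the sample stays near the original; by the last step it is close to 0, so the sample is dominated by noise.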

Transformers in Diffusion Models

Role of Transformers

Transformers have revolutionized the field of natural language processing and have also found applications in generative models, including diffusion models. The core strength of Transformers lies in their ability to handle long-range dependencies through self-attention mechanisms, making them highly suitable for tasks where context and coherence over long sequences are crucial.

Integration with Diffusion Models

In diffusion models, Transformers can be used to model the reverse process, where their ability to capture complex dependencies helps in effectively learning the denoising steps. The self-attention mechanism enables the model to focus on different parts of the input data when generating new samples, allowing for more accurate and contextually appropriate results.
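To make the self-attention idea concrete, here is a small sketch of scaled dot-product self-attention in plain Python. It is deliberately simplified: a real Transformer first applies learned query, key, and value projections and uses several attention heads, all of which are omitted here. The toy `patches` input and the function names are assumptions made for illustration.

```python
import math

def softmax(row):
    """Numerically stable softmax over a list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """Scaled dot-product self-attention with identity projections.

    Each output token is a weighted average of all input tokens, where the
    weights come from how similar the tokens are to the querying token.
    """
    d = len(tokens[0])
    outputs, weights = [], []
    for q in tokens:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in tokens]
        w = softmax(scores)
        weights.append(w)
        outputs.append([sum(wj * v[i] for wj, v in zip(w, tokens)) for i in range(d)])
    return outputs, weights

# Three toy tokens, e.g. embeddings of three image patches.
patches = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
mixed, weights = self_attention(patches)
```

In this example the first token attends most strongly to itself and to the similar second token, and least to the dissimilar third one. That weighting is what lets a denoising network draw on distant but related parts of the input.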

Architecture of Diffusion Models with Transformers

Basic Architecture

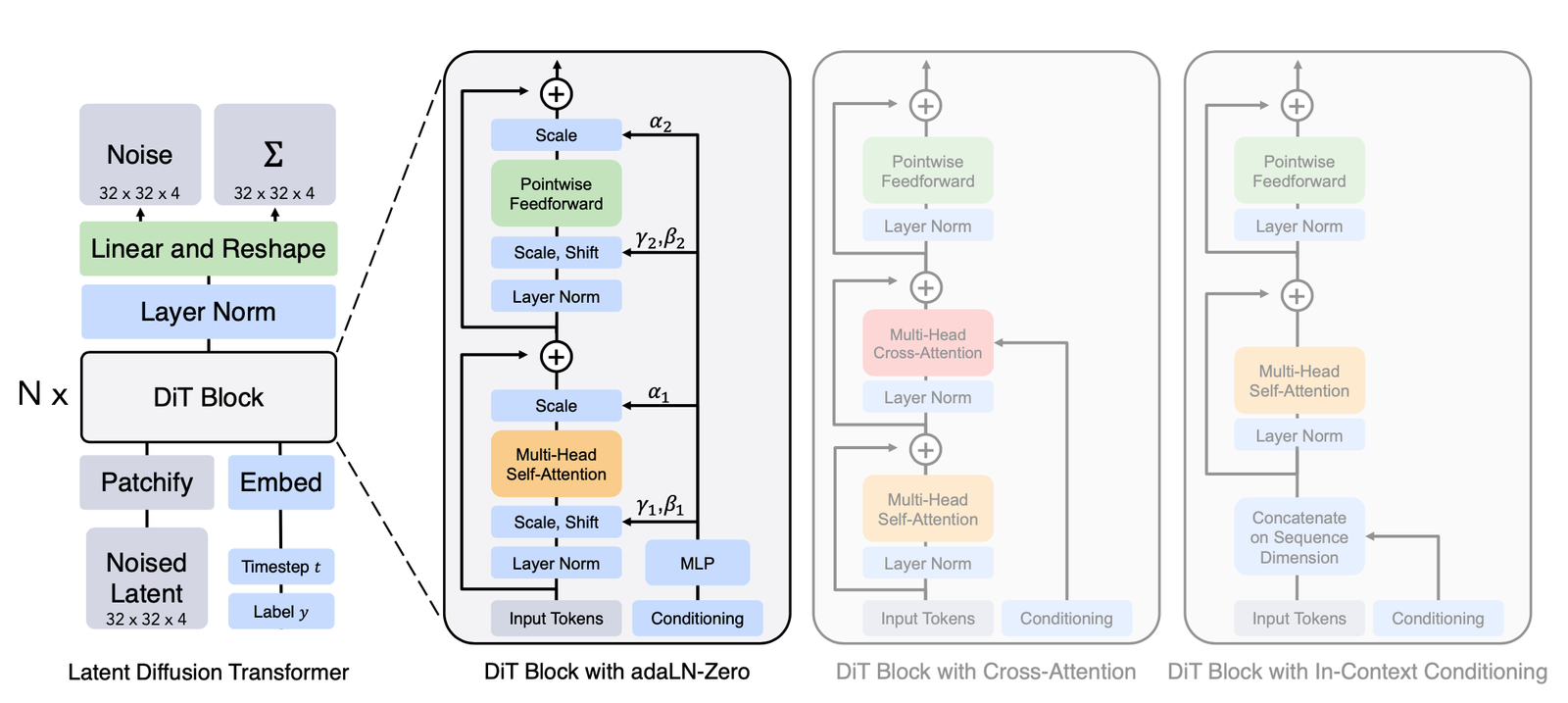

The architecture of a diffusion model integrated with Transformers typically involves the following components:

- Noise Scheduler: Determines the amount of noise to be added at each step in the forward process.

- Transformer Network: Used in the reverse process to model the denoising steps. The network is trained to predict the noise that was added at each step, allowing it to iteratively recover the original data.

- Latent Variables: These are intermediate representations of the data at different noise levels, which the model refines through each step of the reverse process.
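The sketch below shows one way the three components listed above could fit together at generation time. It is an illustration under stated assumptions rather than a definitive implementation. The update rule follows the commonly used DDPM-style sampling step, the linear schedule and its values are assumed, and the trained Transformer is replaced by a hypothetical stand-in, `oracle_noise`, which pretends the data set contains a single known point so that the loop can be checked without training anything.

```python
import math
import random

def make_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Noise scheduler: per-step variances and cumulative signal fractions."""
    betas = [beta_start + (beta_end - beta_start) * i / (num_steps - 1)
             for i in range(num_steps)]
    alpha_bars, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        alpha_bars.append(prod)
    return betas, alpha_bars

def sample(predict_noise, dim, betas, alpha_bars, rng):
    """Reverse process: start from pure noise and denoise one step at a time."""
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]   # latent at the noisiest level
    for t in reversed(range(len(betas))):
        eps = predict_noise(x, t)                   # this is the network's job
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        mean = [(xi - coef * ei) / math.sqrt(1.0 - betas[t])
                for xi, ei in zip(x, eps)]
        scale = math.sqrt(betas[t]) if t > 0 else 0.0   # no fresh noise on the last step
        x = [m + scale * rng.gauss(0.0, 1.0) for m in mean]
    return x

# Stand-in for the trained Transformer (an assumption for this example):
# an oracle that knows the only data point and returns the exact noise.
TARGET = [1.0, -0.5, 0.25, 0.0]
betas, alpha_bars = make_schedule(1000)

def oracle_noise(x, t):
    a = alpha_bars[t]
    return [(xi - math.sqrt(a) * ti) / math.sqrt(1.0 - a)
            for xi, ti in zip(x, TARGET)]

generated = sample(oracle_noise, len(TARGET), betas, alpha_bars, random.Random(0))
```

With the oracle in place, the loop ends at the target point. In a real system `predict_noise` would be the Transformer, and the output would be a new sample from the learned data distribution rather than a memorized point.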

Training Process

Training a diffusion model with Transformers involves minimizing a loss function, typically the mean squared error, between the noise that was added and the noise the network predicts. At each training iteration a noise level is chosen at random, so the network learns to denoise at every step of the process. Training is typically performed on a large dataset, which is what allows the model to generate high-quality samples once the reverse process is run step by step.
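As a rough sketch of this objective, the code below computes the loss for a single training example in plain Python. The three-level `alpha_bars` list and both predictor functions are hypothetical stand-ins introduced only for illustration. In practice the predictor would be the Transformer and the loss would be averaged over a batch and minimized by gradient descent.

```python
import math
import random

def mse(a, b):
    """Mean squared error between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def training_loss(predict_noise, x0, alpha_bars, rng):
    """One sampled term of the noise-prediction loss."""
    t = rng.randrange(len(alpha_bars))           # random noise level
    eps = [rng.gauss(0.0, 1.0) for _ in x0]      # the noise that gets added
    a = alpha_bars[t]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    return mse(eps, predict_noise(xt, t))        # added noise vs. predicted noise

alpha_bars = [0.9, 0.5, 0.1]                     # assumed toy schedule with 3 levels
x0 = [1.0, -0.5, 0.25, 0.0]

def zero_predictor(xt, t):
    """Untrained stand-in: always predicts 'no noise'."""
    return [0.0 for _ in xt]

def perfect_predictor(xt, t):
    """Idealized stand-in: recovers the noise exactly because it knows x0."""
    a = alpha_bars[t]
    return [(v - math.sqrt(a) * x) / math.sqrt(1.0 - a) for v, x in zip(xt, x0)]

zero_loss = training_loss(zero_predictor, x0, alpha_bars, random.Random(0))
perfect_loss = training_loss(perfect_predictor, x0, alpha_bars, random.Random(0))
```

The untrained stand-in gets a positive loss, while the idealized one drives the loss to essentially zero. Training moves a real network from the first situation toward the second.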

Applications and Advantages

High-Quality Data Generation

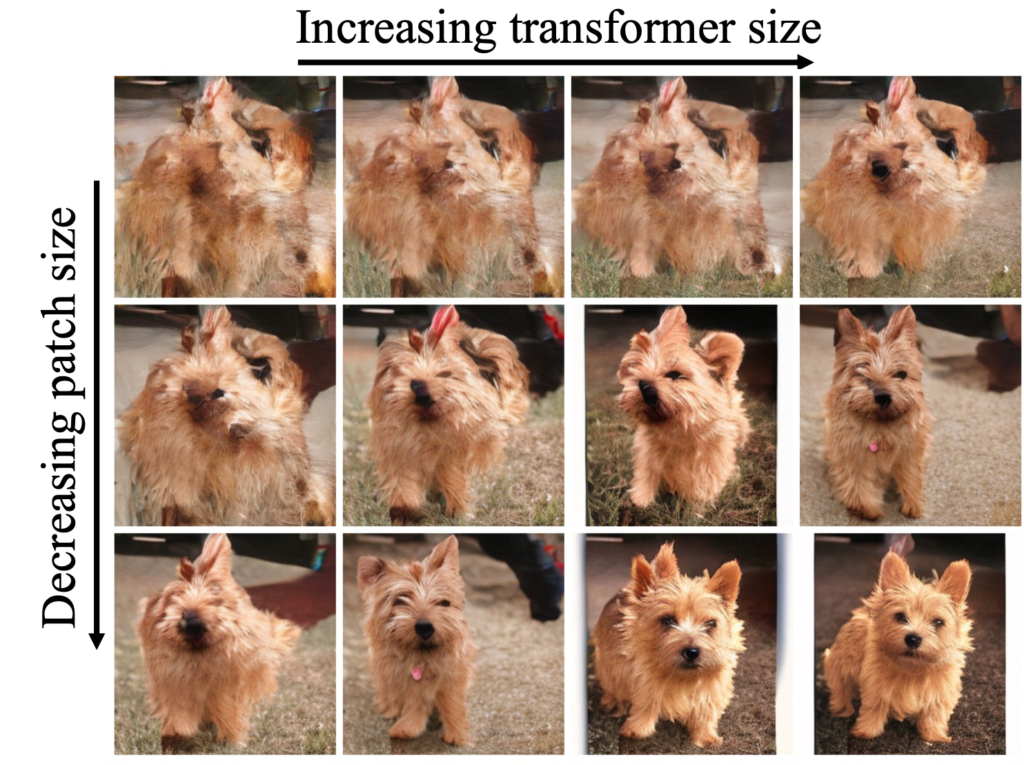

One of the main advantages of using diffusion models with Transformers is their ability to generate high-quality and complex data, such as images with fine details or coherent text sequences. Self-attention lets the model draw on context from the entire input, leading to more realistic and contextually appropriate outputs.

Versatility Across Domains

These models are highly versatile and can be applied across various domains, including image generation, audio synthesis, and natural language processing. Because Transformers operate on sequences of tokens, they can handle many types of data once that data is split into tokens, which makes them a natural choice for enhancing the capabilities of diffusion models.

Challenges and Future Directions

Computational Complexity

One of the main challenges in using diffusion models with Transformers is the computational complexity, particularly in terms of training time and resources. Generation requires many sequential denoising steps, and the cost of self-attention grows quadratically with the number of tokens, so the combination can be demanding for both training and sampling.
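A back-of-the-envelope calculation shows how quickly this cost grows. The sketch below counts only token-pair comparisons in self-attention and ignores everything else in the network. The token counts, layer count, and step count are illustrative assumptions, not measurements from any particular model.

```python
def attention_pairs(num_tokens):
    """Self-attention compares every token with every other token."""
    return num_tokens * num_tokens

def sampling_cost(num_tokens, num_layers, num_steps):
    """Token-pair comparisons needed to generate one sample."""
    return attention_pairs(num_tokens) * num_layers * num_steps

# Assumed settings: 12 attention layers, 50 denoising steps.
small = sampling_cost(num_tokens=256, num_layers=12, num_steps=50)
large = sampling_cost(num_tokens=1024, num_layers=12, num_steps=50)
ratio = large // small   # 4x more tokens costs 16x more attention work
```

Quadrupling the number of tokens multiplies the attention work by sixteen, and that cost is paid again at every denoising step.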

Ongoing Research

Research is ongoing to optimize these models, making them more efficient and scalable. Techniques such as pruning, quantization, and the development of more efficient Transformer variants are being explored to address these challenges.

Conclusion

Diffusion models combined with Transformers represent a significant advancement in generative modeling. They offer a powerful tool for creating high-quality, complex data. Their ability to leverage the strengths of both diffusion processes and Transformers makes them highly effective across a range of applications. We can expect even more sophisticated models to emerge, pushing the boundaries of what is possible in AI-driven data generation.

This article provides an overview of diffusion models with Transformers, explaining their working mechanism, architecture, and applications. As these models continue to develop, they hold great potential for advancing the field of generative modeling in AI.
